Many network deployments don’t fail because the wrong equipment was chosen or because something was configured incorrectly. They fail because real-world production environments behave nothing like labs, even when the same hardware and settings are used.

In a lab, everything is predictable. Power is clean, cables are short, temperatures are controlled and network traffic patterns behave the way you expect them to. In production, none of those assumptions holds for very long.

For system integrators, network engineers, and IT infrastructure teams, this gap between datasheet specifications and real-world performance is one of the most common causes of post-deployment issues.

The False Confidence of Lab Testing

Lab environments are designed to remove uncertainty. Typically, you’re working with:

- Stable, clean power

- Minimal or no wireless interference

- Short, high-quality cable runs

- Controlled temperatures

- Predictable network-traffic patterns

When a network design works perfectly under these conditions, it feels proven. But the reality is simple: lab testing only proves that a design can work, not that it will keep working once deployed in a live production environment.

Production sites break these assumptions almost immediately.

What Changes in Deployment Environments

1. Power Stops Being “Clean.”

In real deployments, power quality is rarely ideal. It’s often shared with non-IT equipment, poorly grounded or affected by frequent voltage fluctuations. UPS systems may be undersized or already past their best days.

The result isn’t always dramatic failure. Instead, you get things like:

- Random reboots

- Inconsistent Power over Ethernet (PoE) delivery

- Devices behaving differently depending on the time of day

These issues are frustrating because they’re hard to reproduce in a lab. Everything looks fine until it isn’t.

2. Wireless Interference Doesn’t Stay Still

Wireless network designs are usually validated during site surveys or early testing, when the radio frequency (RF) environment looks manageable.

But production environments evolve. New neighboring networks appear. Temporary access points quietly become permanent. Users introduce their own routers or IoT devices. Airtime slowly disappears.

What looked like “good coverage” during testing can degrade over time, even though signal strength still looks acceptable on paper.

3. Cabling Rarely Matches the Diagram

Cabling is one of the most underestimated risks in real networks.

In practice, cable runs are often longer than planned. Different cable categories (Cat5e, Cat6, Cat6A) get mixed. Terminations vary in quality. Labels go missing or were never added in the first place.

These issues don’t usually cause immediate failure. Instead, they show up months later as slow links, intermittent packet loss, or unstable PoE. By then, troubleshooting becomes painful, and the integrator often gets the call back.

4. Environmental Stress Adds Up

Labs avoid heat, dust, and humidity. Production network sites do not.

Poor ventilation, cramped enclosures, dust buildup and high temperatures slowly take their toll. Devices may stay online, but performance degrades, components throttle and failures become unpredictable.

Because this happens gradually, it’s easy to miss during commissioning and hard to explain later.

5. Users Ignore the Design Assumptions

If there’s one constant thing in production networks, it’s that users will do things you didn’t plan for.

They connect more devices than expected. They generate bursty, unpredictable traffic. They use applications that were never mentioned during design discussions. And sometimes, they plug in their own switches, routers or access points.

Lab traffic models can’t fully simulate this behavior. Designs that work perfectly for “expected usage” often struggle once real users take over.

The Design–Integration Gap

Most post-deployment network problems aren’t caused by bad designs; they’re caused by optimistic assumptions.

Common gaps include:

- Assuming perfect installation

- Designing for minimum requirements instead of operational tolerance

- Limited validation beyond basic acceptance testing

- Documentation that explains what was installed, but not why

When issues appear later, the reasoning behind key decisions is often lost, making troubleshooting and handover harder than it should be.

How Experienced Integrators Plan for Reality

Integrators with real field experience design differently. They assume things will go wrong.

They expect imperfect power, RF noise, and cabling inconsistencies. They validate assumptions on site using proof-of-concept (PoC) deployments. They design with buffers, not bare minimums. And they document trade-offs clearly.

This approach doesn’t eliminate problems, but it significantly reduces surprises.

Deploying Networks That Actually Hold Up

Many of the issues discussed here only become visible after deployment, when fixing them is expensive, and reputations are on the line. Identifying these risks early makes a real difference.

At Optace Networks, we work with system integrators to implement proof-of-concept deployments that validate equipment performance in real environments. This approach helps reduce deployment risk before rolling out at scale. We invite you to collaborate with us as you plan and execute reliable, scalable and resilient network deployments.

Latest Articles

Thu Feb 12 2026

Why “Works in the Lab” Often Fails in Production Networks.Fri Jan 09 2026

Managing Modern Networks with cnMaestro X: A Unified Approach for IT Teams, Business Leaders, and MSPsTue Dec 09 2025

Why MikroTik Should Be in Your 2026 Network PlanRelated Articles

.jpg)

ePMP 3000 - 5X Performance with Gen3 Technology

-(1).png)

Africa Tech Festival Displays Strides in Connectivity and Telecommunications Infrastructure across Africa

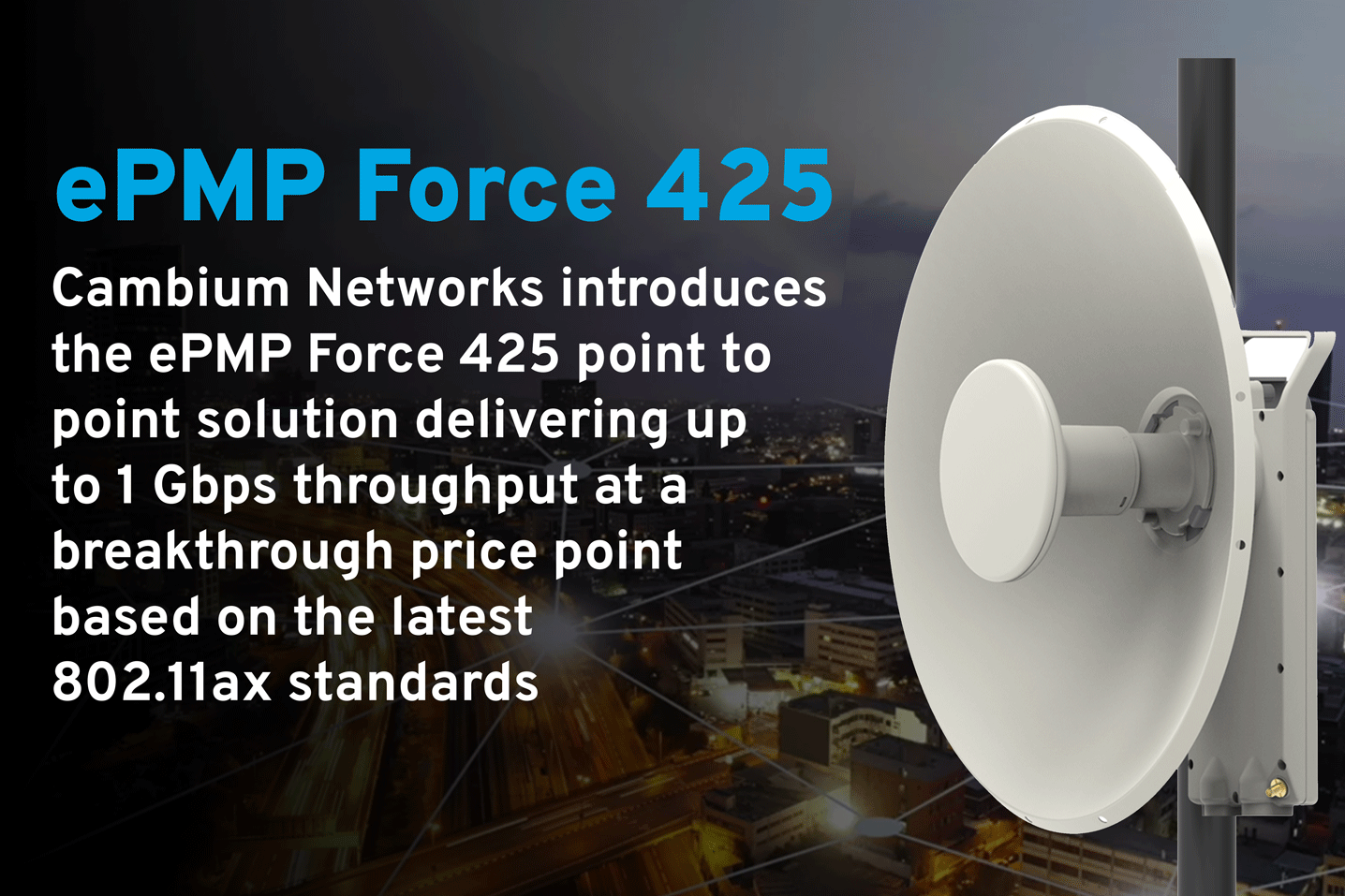

Cambium Networks ePMP Force 425 - The Industry’s First Point-to-Point Solution Based on 802.11ax

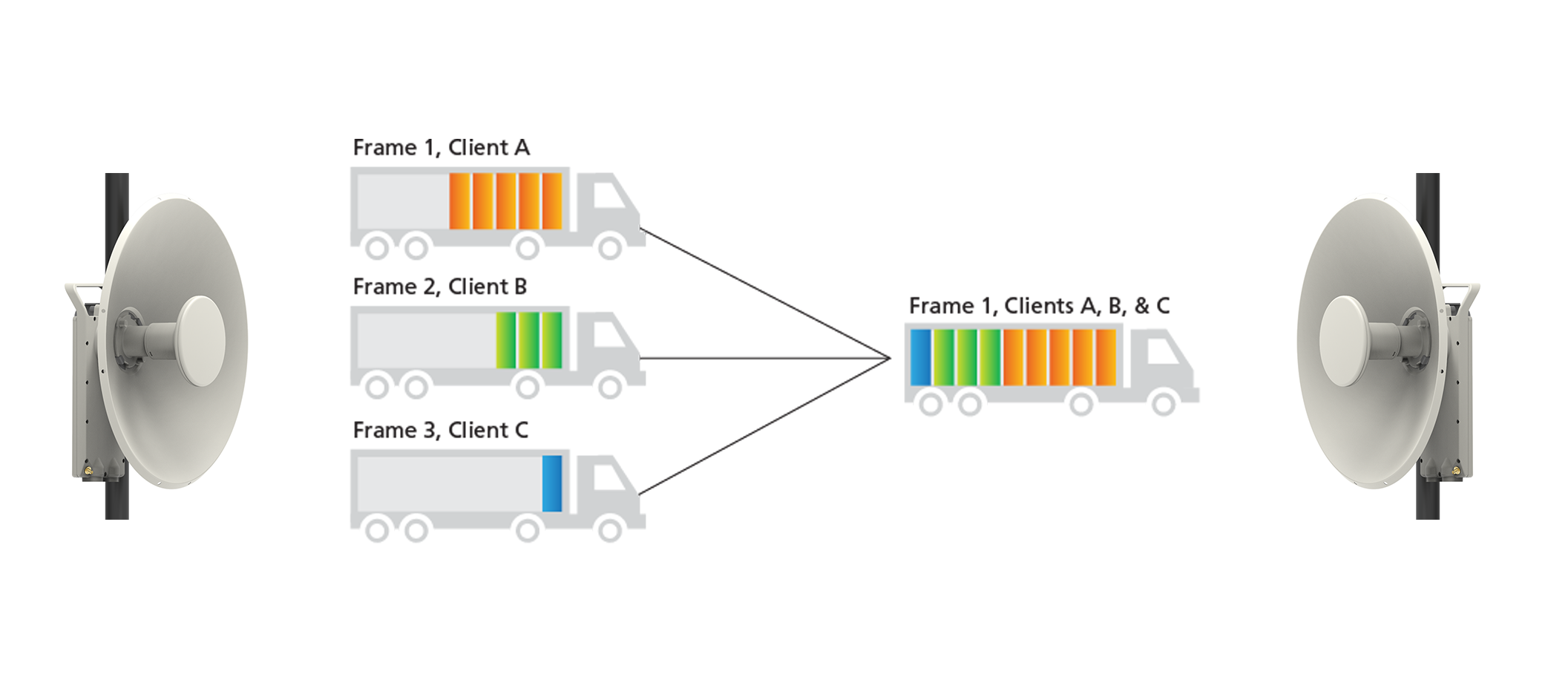

The Power of OFDMA in Wireless Broadband

In this article, we delve into the principles of OFDMA, the defining principle of the 802.11ax standard, its applications, and its impact on wireless broadband.

© 2026 PoweredbyOptace Networks Limited. All Rights Reserved.